
The Evolution of Computer Vision and Why the Future Belongs to the Edge

Discover how computer vision evolved from handcrafted algorithms to multimodal AI and why the future of visual intelligence is moving to the edge.


Authored By

Calin Ciobanu

Co-founder and CTO

Computer vision has evolved more in the last 15 years than in the previous 50.

From handcrafted algorithms to multimodal AI models capable of interpreting both text and imagery, we’ve moved from teaching machines to “see” to enabling them to “understand.”

But this evolution hasn’t been linear; it has happened in distinct stages. Understanding that journey helps explain where the next breakthroughs will come from.

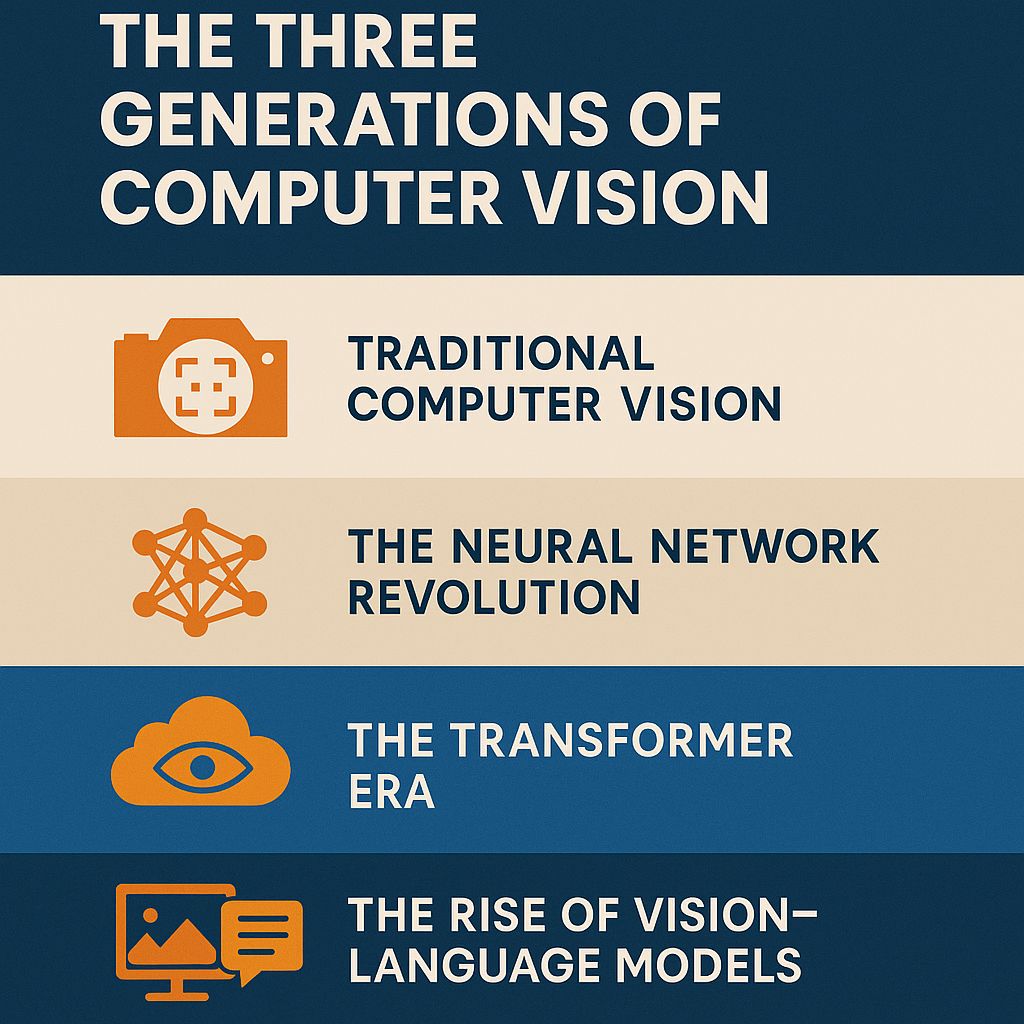

The Three (and a Half) Generations of Computer Vision

1. Traditional Computer Vision (Pre-2010)

Before deep learning, computer vision was dominated by handcrafted features and mathematical heuristics.

Engineers relied on predictable, explainable algorithms (edge detection, segmentation, statistical pattern recognition) that could be designed and implemented mathematically.

These systems worked, but only in narrow conditions.

They lacked adaptability and struggled to generalize beyond what they were explicitly programmed to see.
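
To make "handcrafted" concrete, here is a minimal sketch of one classic technique from this era, Sobel edge detection, written in plain Python. The function name `sobel_magnitude` and the toy image are illustrative assumptions, not code from any production system. The point is that every number in the filter was chosen by a person, not learned from data.

```python
import math

# Fixed, hand-designed 3x3 Sobel kernels: nothing here is learned.
SOBEL_X = [[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]]   # responds to vertical edges
SOBEL_Y = [[-1, -2, -1], [0, 0, 0], [1, 2, 1]]   # responds to horizontal edges


def sobel_magnitude(image):
    """Return the gradient magnitude for the interior pixels of a 2D grid.

    `image` is a list of equal-length rows of numbers. Border pixels are
    skipped, so the result is two rows and two columns smaller than the input.
    """
    height, width = len(image), len(image[0])
    result = []
    for y in range(1, height - 1):
        row = []
        for x in range(1, width - 1):
            gx = gy = 0
            for dy in range(3):
                for dx in range(3):
                    pixel = image[y + dy - 1][x + dx - 1]
                    gx += SOBEL_X[dy][dx] * pixel
                    gy += SOBEL_Y[dy][dx] * pixel
            row.append(math.hypot(gx, gy))
        result.append(row)
    return result


# Toy 4x6 image (hypothetical): dark left half, bright right half.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]
edges = sobel_magnitude(image)
```

On this toy image the response is zero in the flat regions and large only where dark meets bright. That is also the weakness: the same fixed kernel has no way to adapt when lighting, texture, or viewpoint changes.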

2. The Neural Network Revolution (2010–2020)

Everything changed around 2012 with ImageNet and AlexNet.

Fei-Fei Li's ImageNet dataset supplied the large-scale benchmark, and AlexNet, built by Alex Krizhevsky, Ilya Sutskever, and Geoffrey Hinton, showed that deep neural networks could outperform traditional algorithms on it by a wide margin.

The world shifted to Convolutional Neural Networks (CNNs): relatively lightweight, flexible architectures capable of detecting, recognizing, and classifying objects across thousands of categories.

CNNs brought computer vision to phones, cars, and retail cameras. They made AI practical.

3. The Transformer Era (2020–Today)

Then came transformers, first in language (Attention Is All You Need, 2017), then in vision.

Transformers can outperform CNNs in accuracy and flexibility, but at a cost: they are computationally heavy, memory-intensive, and dependent on powerful GPUs.

Despite that, Vision Transformers (ViTs) have become the backbone of many of the latest systems, powering everything from autonomous vehicles to large-scale image analytics.
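
A rough way to see where that cost comes from: a ViT splits the image into fixed-size patches, treats each patch as a token, and self-attention compares every token with every other token. The sketch below is back-of-the-envelope arithmetic only. The helper names are hypothetical, and it ignores the extra class token, multiple heads, and multiple layers.

```python
def patch_token_count(image_size, patch_size):
    """Number of patch tokens for a square image split into square patches."""
    if image_size % patch_size != 0:
        raise ValueError("image_size must be divisible by patch_size")
    patches_per_side = image_size // patch_size
    return patches_per_side * patches_per_side


def attention_matrix_entries(num_tokens):
    """Entries in one self-attention score matrix (every token vs. every token)."""
    return num_tokens * num_tokens


# 224x224 image, 16x16 patches: 196 tokens, 38,416 attention scores.
small = attention_matrix_entries(patch_token_count(224, 16))

# Doubling the resolution to 448x448: 784 tokens, 614,656 attention scores.
large = attention_matrix_entries(patch_token_count(448, 16))

# Four times the pixels, but sixteen times the attention scores.
growth = large // small
```

Because the score matrix grows with the square of the token count, higher-resolution inputs get expensive quickly, which is one reason these models lean on powerful GPUs.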

3.5. The Rise of Vision-Language Models

The newest frontier combines vision and language.

These multimodal models can look at an image and describe it in words or generate new images from text.

They’re incredibly capable, but also incredibly heavy. Running them at scale requires massive compute power, which brings us to the most exciting direction today: edge computing.

Why the Future of Computer Vision Is on the Edge

The next big challenge is no longer about making AI smarter; it's about making it smaller.

Over the last year, Apple, Samsung, Meta, and Microsoft have all published papers showing how large models can be compressed to run locally on phones.

This is the shift I find most exciting because it directly intersects with what we’re building at OmniShelf.

At OmniShelf, we’ve managed to compress and optimize computer vision models so efficiently that they can run on hardware equivalent to a Samsung S7 while maintaining over 95% recognition accuracy.

That means scanning hundreds of products on a shelf, live, in under 15 seconds.

The implications for retail are huge.

We can deliver real-time insights without relying on cloud processing, reducing latency, cost, and data transfer, all while improving privacy and resilience.
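
This article does not detail which compression techniques OmniShelf uses, so the following is not our pipeline. It is a generic, minimal sketch of quantization, one of the techniques listed under Sources and Further Reading, assuming a simple symmetric 8-bit scheme with a single scale for all weights. The function names and sample weights are hypothetical.

```python
def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    quantized = [max(-127, min(127, round(w / scale))) for w in weights]
    return quantized, scale


def dequantize(quantized, scale):
    """Recover approximate float weights from the integers and the scale."""
    return [q * scale for q in quantized]


# Hypothetical weights, for illustration only.
weights = [0.52, -1.27, 0.003, 0.9]
quantized, scale = quantize_int8(weights)
restored = dequantize(quantized, scale)

# Worst-case rounding error is about half of one quantization step.
max_error = max(abs(r - w) for r, w in zip(restored, weights))
```

Stored as 8-bit integers instead of 32-bit floats, the weights take roughly a quarter of the memory, at the price of a small rounding error per weight. Real deployments usually combine this with per-channel scales, pruning, or distillation, and measure the accuracy impact on real data.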

Data: The Real Limiting Factor

Even with all this progress, one limitation remains: data quality.

Computer vision models, no matter how advanced, don’t have common sense.

They operate on probability, so if the data is biased or of poor quality, the results will be too.

That’s why at OmniShelf, our focus isn’t only on the model architecture but also on domain-specific, high-quality data pipelines. Because the smarter the data, the smarter the AI.

Looking Ahead

We’re entering an era where AI doesn’t just live in the cloud; it lives in the devices around us.

Computer vision is becoming distributed, efficient, and context-aware.

For me, that’s what makes this field so fascinating: we’re finally reaching a point where machines can “see” as fast as we can and, increasingly, right where we are.

At OmniShelf, we’re extending the frontier of computer vision by making cutting-edge AI accessible at the edge. Stay tuned: the next phase of visual intelligence is happening right on the shelf.

Sources and Further Reading

This article refers to several key research papers and concepts that define the modern era of computer vision:

- ImageNet and AlexNet:

  - Krizhevsky, A., Sutskever, I., & Hinton, G. E. (2012). ImageNet Classification with Deep Convolutional Neural Networks. Advances in Neural Information Processing Systems (NIPS). This paper demonstrated the power of deep CNNs, winning the ImageNet challenge and igniting the deep learning revolution.

- The Transformer Architecture:

  - Vaswani, A., Shazeer, N., Parmar, N., Uszkoreit, J., Jones, L., Gomez, A. N., Kaiser, Ł., & Polosukhin, I. (2017). Attention Is All You Need. Advances in Neural Information Processing Systems (NIPS). The foundational paper introducing the Transformer, which replaced recurrent and convolutional layers with the attention mechanism.

- Vision Transformers (ViTs):

  - Dosovitskiy, A., Beyer, L., Kolesnikov, A., Weissenborn, D., Zhai, X., Unterthiner, T., Dehghani, M., Minderer, S., Heigold, G., Gelly, S., Uszkoreit, J., & Houlsby, N. (2021). An Image Is Worth 16x16 Words: Transformers for Image Recognition at Scale. International Conference on Learning Representations (ICLR). This work adapted the Transformer architecture for images, treating patches as sequence tokens.

- Model Compression and Edge AI:

  - Han, S., Mao, H., & Dally, W. J. (2016). Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. International Conference on Learning Representations (ICLR). A seminal paper on reducing model size for deployment on resource-constrained devices.

  - General principles of model compression techniques (including pruning, quantization, and knowledge distillation) are now widely discussed in industry publications focused on Edge AI deployment.
